For marketing and advertising leaders, the rise of GDPR and CCPA has created a fundamental tension: the need for granular data to drive personalization versus the legal imperative to respect consumer privacy.

In the advertising world, compliance is often treated as a "storage" problem: collect consent and store it in a database. However, under regulations like the CCPA, which impose strict deadlines such as honoring "Do Not Sell" opt-out requests within 15 business days, storage is only the beginning. The real risk lies in integrating and orchestrating that consent across every downstream system that uses the data.

If your organization ingests privacy preferences but relies on manual filtering before activating audiences, you are one missed filter away from violating privacy regulations. Here is why the "filter-at-the-end" strategy fails marketing use cases, and how a modern governance architecture can automate compliance enforcement, reduce regulatory risk, and honor customer consent.

The Hidden Risk: Poisoning the AI Well

Modern marketing relies heavily on AI and machine learning (ML) for profiling, segmentation, and lookalike modeling. This is where a critical compliance gap exists: consent for data collection is distinct from consent for processing, and consent for marketing is distinct from consent for profiling.

GDPR-protected customers have the right to opt out of automated individual decision-making, including profiling. The CCPA's recently finalized regulations on automated decisionmaking technology (ADMT) will come into effect on January 1, 2027. While the scope of these regulations does not directly affect advertisers and marketers, the updates show the gap between CCPA and GDPR requirements continuing to close. It is only a matter of time before it narrows further.

Where consent is the lawful basis you rely on, GDPR does not allow you to process a customer's personal data, or use it for profiling, without that consent. GDPR defines processing and profiling in Article 4 as follows:

‘processing’ means any operation or set of operations which is performed on personal data or on sets of personal data, whether or not by automated means, such as collection, recording, organisation, structuring, storage, adaptation or alteration, retrieval, consultation, use, disclosure by transmission, dissemination or otherwise making available, alignment or combination, restriction, erasure or destruction;

‘profiling’ means any form of automated processing of personal data consisting of the use of personal data to evaluate certain personal aspects relating to a natural person, in particular to analyse or predict aspects concerning that natural person’s performance at work, economic situation, health, personal preferences, interests, reliability, behaviour, location or movements;

What does this mean for marketers and advertisers? Under the most conservative interpretation, if a customer opts out of processing, you cannot collect, store, or analyze their data and remain compliant. Without the customer's consent, you cannot use their personal data for AI or for many advertising and marketing use cases.

The danger of the traditional approach (simply ingesting consent flags into your data foundation) is that a Data Scientist building a propensity model or targeting algorithm might access raw data that still includes customers who have opted out. Once a model is trained on non-compliant data, "unlearning" that data is technically difficult and legally perilous. To truly honor user consent, organizations must prevent misuse of data before it reaches the modeling stage.
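One way to make "before it reaches the modeling stage" concrete is to put the consent gate inside the function that builds the training set, so no caller can skip it. The sketch below is a minimal illustration, not a Databricks API: the record shape and the flag names `consent_processing` and `consent_profiling` are hypothetical assumptions.

```python
from dataclasses import dataclass


# Hypothetical record shape; the consent flag names are illustrative
# assumptions, not fields defined by Databricks or any other platform.
@dataclass(frozen=True)
class CustomerRecord:
    customer_id: str
    consent_processing: bool
    consent_profiling: bool
    purchases_90d: int
    converted: bool


def consent_gate(records):
    """Keep only customers who consented to both processing and profiling.

    Runs before any feature engineering, so opted-out customers never
    enter a training set and there is nothing to "unlearn" later.
    """
    return [r for r in records if r.consent_processing and r.consent_profiling]


def build_training_set(records):
    """Return (features, labels); the gate is applied here, not left to the caller."""
    gated = consent_gate(records)
    features = [[r.purchases_90d] for r in gated]
    labels = [int(r.converted) for r in gated]
    return features, labels


if __name__ == "__main__":
    raw = [
        CustomerRecord("c1", True, True, 5, True),
        CustomerRecord("c2", True, False, 9, True),    # opted out of profiling
        CustomerRecord("c3", False, False, 2, False),  # opted out of processing
        CustomerRecord("c4", True, True, 1, False),
    ]
    X, y = build_training_set(raw)
    print(X, y)  # [[5], [1]] [1, 0]
```

The design choice is that the safe path is the only path: a notebook that calls `build_training_set` cannot forget to filter, which is exactly the failure mode of filtering at the end.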

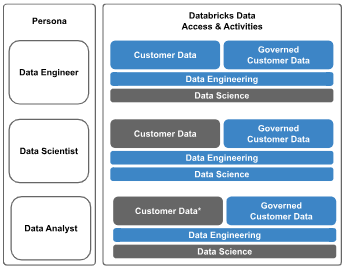

A Persona-Based Approach to Enforcing Compliance with Databricks Unity Catalog

To secure advertising and marketing workflows, move away from generic access rights and adopt a persona-based architecture. This gives the people who activate data (marketers and advertisers) and the people who analyze and model it (data analysts and data scientists) guardrails that reduce the risk of violating privacy regulations.
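The persona model described below can be summarized as a default-deny capability matrix. The following is a minimal sketch under stated assumptions: the persona and capability names are hypothetical, and in Unity Catalog such grants would be expressed as privileges on groups or service principals rather than in Python. It models only the decision logic.

```python
from enum import Enum, auto


class Capability(Enum):
    BUILD_PIPELINES = auto()
    READ_RAW = auto()          # may include customers who have not consented
    READ_CONSENTED = auto()    # opted-out customers removed, PII cleansed
    READ_SHAREABLE = auto()    # only customers who consented to sale/sharing
    USE_AI_TOOLS = auto()
    SHARE_EXTERNALLY = auto()


# Hypothetical grants mirroring the persona guardrails; anything not
# listed here is denied by default.
PERSONA_GRANTS = {
    "data_engineer": frozenset({Capability.BUILD_PIPELINES, Capability.READ_RAW}),
    "data_scientist": frozenset({Capability.READ_CONSENTED, Capability.USE_AI_TOOLS}),
    "data_analyst": frozenset({Capability.READ_CONSENTED}),
    "data_sharer": frozenset({Capability.READ_SHAREABLE, Capability.SHARE_EXTERNALLY}),
}


def is_allowed(persona: str, capability: Capability) -> bool:
    """Default-deny check: unknown personas and ungranted capabilities are refused."""
    return capability in PERSONA_GRANTS.get(persona, frozenset())


def require(persona: str, capability: Capability) -> None:
    """Raise instead of silently continuing when a persona oversteps its role."""
    if not is_allowed(persona, capability):
        raise PermissionError(f"{persona} is not granted {capability.name}")


if __name__ == "__main__":
    require("data_scientist", Capability.USE_AI_TOOLS)  # passes
    for persona in PERSONA_GRANTS:
        # Only data_sharer prints True.
        print(persona, is_allowed(persona, Capability.SHARE_EXTERNALLY))
```

Default-deny matters here: a new persona or capability starts with no access, so a gap in the matrix fails closed rather than open.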

1. The Data Engineer: Pipelines Only

Data Engineers should be the only persona with access to data that may include customers who have not consented. They need this access to build the data pipelines that create the objects required downstream.

The Guardrails: Data Engineers can only build data pipelines. Their role does not require AI tools, and they cannot share data with third parties. Any processing opt-out that would restrict data engineering activities is applied upstream of the data foundation, so data engineers never inadvertently process data belonging to customers who opted out.
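The split between "handled upstream" and "built by the engineer" can be sketched as two steps: ingestion drops processing opt-outs before data lands, and the engineer's pipeline derives one curated table per downstream purpose. This is a hypothetical illustration; the event shape, the opt-out sets, and the table names `analytics`, `profiling`, and `activation` are assumptions, not a prescribed schema.

```python
from dataclasses import dataclass


# Hypothetical event shape; field names are illustrative assumptions.
@dataclass(frozen=True)
class Event:
    customer_id: str
    page: str


def ingest(events, processing_opt_outs):
    """Upstream step: drop customers who opted out of processing before the
    data lands in the data foundation, so engineers never handle it."""
    return [e for e in events if e.customer_id not in processing_opt_outs]


def build_purpose_tables(events, profiling_opt_outs, sale_share_opt_outs):
    """Engineer-owned step: derive one curated table per downstream purpose.

    Each downstream persona is then granted only the table matching its purpose.
    """
    return {
        "analytics": list(events),
        "profiling": [e for e in events if e.customer_id not in profiling_opt_outs],
        "activation": [e for e in events if e.customer_id not in sale_share_opt_outs],
    }


if __name__ == "__main__":
    raw = [Event("c1", "/home"), Event("c2", "/shoes"), Event("c3", "/cart")]
    landed = ingest(raw, processing_opt_outs={"c3"})
    tables = build_purpose_tables(landed, profiling_opt_outs={"c2"},
                                  sale_share_opt_outs={"c1"})
    for name, rows in tables.items():
        print(name, [e.customer_id for e in rows])
```

Keeping each purpose in its own curated table means downstream access can be granted per purpose, instead of handing every persona the same table and trusting them to filter.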

2. The Data Scientist: Safe AI by Design

Data Scientists need high-fidelity data to build accurate targeting models. However, they do not need, and should not have, access to users who have opted out of processing.

The Guardrails: Data Scientists should only interact with data from which customers who have not consented to processing, profiling, or other relevant purposes have been removed. They should also be restricted to cleansed data, so they cannot inadvertently access raw PII that is not needed for algorithmic training.
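"Cleansed data" for modeling often means dropping direct identifiers and replacing the customer ID with a keyed pseudonym, so rows can still be joined without exposing raw PII. The sketch below assumes hypothetical column names and a key held by the pipeline owner rather than the Data Scientist. Note that pseudonymized data is still personal data under GDPR, so the opt-out filter remains necessary.

```python
import hashlib
import hmac

# Hypothetical column names; adjust to your own schema.
DIRECT_IDENTIFIERS = {"email", "phone", "full_name"}


def pseudonymize(customer_id: str, key: bytes) -> str:
    """Keyed hash (HMAC-SHA256): stable for joins, not reversible without the key."""
    return hmac.new(key, customer_id.encode("utf-8"), hashlib.sha256).hexdigest()


def training_view(rows, profiling_opt_outs, key):
    """Rows a Data Scientist may read: profiling opt-outs removed, direct
    identifiers dropped, and the customer ID replaced by a pseudonymous key."""
    view = []
    for row in rows:
        if row["customer_id"] in profiling_opt_outs:
            continue
        cleaned = {k: v for k, v in row.items()
                   if k not in DIRECT_IDENTIFIERS and k != "customer_id"}
        cleaned["customer_key"] = pseudonymize(row["customer_id"], key)
        view.append(cleaned)
    return view


if __name__ == "__main__":
    # Assumption: in practice the key lives in a secret store owned by the
    # pipeline, never in the Data Scientist's workspace.
    key = b"example-key-do-not-use"
    rows = [
        {"customer_id": "c1", "email": "a@example.com", "sessions_30d": 12},
        {"customer_id": "c2", "email": "b@example.com", "sessions_30d": 3},
    ]
    for r in training_view(rows, profiling_opt_outs={"c2"}, key=key):
        print(sorted(r))  # ['customer_key', 'sessions_30d']
```

A keyed hash is used instead of a plain hash because unkeyed hashes of emails or phone numbers can be reversed by hashing candidate values.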

3. The Data Analyst: Analyst Compliance

Data Analysts provide insights critical to marketing and advertising. They do not need access to profiling or AI tools and, like Data Scientists, do not need, and should not have, access to users who have opted out of processing.

The Guardrails: Data Analysts should only interact with data from which customers who have not consented to processing, profiling, or other relevant purposes have been removed. They should also be restricted to cleansed data, so they cannot inadvertently access raw PII that is not needed for their analysis, and they should be blocked from accessing AI tools within the data and analytics environment.

4. The Data Sharer: Controlled Activation (not shown in the diagram)

The "Data Sharer" is the persona responsible for sending audiences to third-party platforms (e.g., DSPs, social platforms). This is usually a service principal. It carries the highest risk because it transfers data outside your environment.

The Guardrails: This persona should have strictly governed access and should be the only one allowed to transmit data outside the environment, reducing the risk of accidental data exfiltration. It is imperative to limit its data access to customers who have consented to the sharing or selling of their data, and to restrict the destinations it can transfer to. This persona should be granted only the functionality required to share data, with no ability to perform other processing activities.
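The two constraints on the Data Sharer (consent for sale/sharing, and a fixed set of destinations) can be checked together before anything leaves the environment. This is a minimal sketch: the destination names, the `match_key` field, and the allowlist itself are hypothetical, and a real activation would go through whatever connector your platform provides.

```python
from dataclasses import dataclass

# Hypothetical allowlist of destinations approved for activation.
APPROVED_DESTINATIONS = frozenset({"dsp_partner_a", "social_platform_b"})


@dataclass(frozen=True)
class AudienceMember:
    customer_id: str
    match_key: str            # e.g. a hashed email used for platform matching
    consent_sale_share: bool


def prepare_export(audience, destination):
    """Return only what may leave the environment, or refuse outright."""
    if destination not in APPROVED_DESTINATIONS:
        raise PermissionError(f"{destination!r} is not an approved destination")
    # Only customers who consented to sale/sharing are exported, and only the
    # match key leaves; internal customer IDs stay inside the environment.
    return [m.match_key for m in audience if m.consent_sale_share]


if __name__ == "__main__":
    audience = [AudienceMember("c1", "k1", True), AudienceMember("c2", "k2", False)]
    print(prepare_export(audience, "dsp_partner_a"))  # ['k1']
```

Checking the destination before touching the audience means an unapproved transfer fails without preparing any payload at all.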

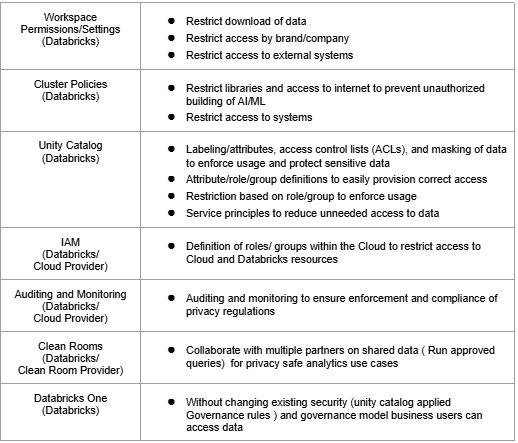

Implementing Guardrails to Enforce Compliance

Native Governance Capabilities

Databricks and your cloud provider offer platform governance capabilities that, together, can enforce the required restrictions. These include proactive capabilities that reduce the risk of non-compliance and reactive capabilities that help you comply holistically with privacy regulations:

From RBAC to ABAC

Historically, marketing operations teams used Role-Based Access Control (RBAC), granting access table by table. In a complex marketing ecosystem this does not scale, because every new table, persona, and consent purpose requires another set of grants. The future is Attribute-Based Access Control (ABAC), enabled by platforms like Databricks Unity Catalog.

ABAC allows you to govern data based on tags rather than individual tables. By applying governed tags to your data assets (e.g., tagging a column as PII or a table as containing EU customer data), you can enforce policies automatically:

- Table Tagging to Control Processing and Profiling: If a table contains data from non-consenting customers, access policies should remove the ability to perform any activity those customers have not consented to.

- Row-Level Filtering for Targeting: If a dataset is tagged for "Profiling," the system can automatically filter out rows for customers who have opted out of profiling, ensuring that no opted-out user ever makes it into an activation segment.

- Dynamic Masking for Analytics: An analyst reviewing campaign performance can see aggregate trends, but columns containing email addresses or phone numbers are automatically masked based on a PII tag.
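The three policies above can be modeled as rules keyed on tags rather than on table names, so any newly tagged table is governed automatically. In Unity Catalog, row filters and column masks are implemented as SQL user-defined functions; the Python below is not Unity Catalog syntax and only sketches the tag-to-policy decision logic. The tag names `contains_non_consented`, `profiling`, and `pii`, the group `pii_readers`, and the `profiling_opt_out` column are all hypothetical.

```python
from dataclasses import dataclass, field

MASK = "***"


# Hypothetical in-memory stand-in for a tagged table.
@dataclass
class TaggedTable:
    rows: list                                        # list of dicts
    table_tags: set = field(default_factory=set)
    column_tags: dict = field(default_factory=dict)   # column name -> set of tags


def read(table, reader_groups, purpose):
    """Apply tag-driven policies at read time; no per-table grants needed."""
    # Table tag: data that still contains non-consenting customers is limited
    # to pipeline work (hypothetical tag and purpose names).
    if "contains_non_consented" in table.table_tags and purpose != "pipeline":
        raise PermissionError(f"purpose {purpose!r} not allowed on non-consented data")

    rows = table.rows
    # Row filter: tables tagged for profiling drop profiling opt-outs.
    if "profiling" in table.table_tags:
        rows = [r for r in rows if not r.get("profiling_opt_out", False)]

    # Column mask: columns tagged "pii" are masked unless the reader is exempt.
    pii_columns = {c for c, tags in table.column_tags.items() if "pii" in tags}
    unmasked = "pii_readers" in reader_groups
    return [
        {c: (v if unmasked or c not in pii_columns else MASK) for c, v in r.items()}
        for r in rows
    ]


if __name__ == "__main__":
    campaign = TaggedTable(
        rows=[
            {"email": "a@example.com", "clicks": 4, "profiling_opt_out": False},
            {"email": "b@example.com", "clicks": 7, "profiling_opt_out": True},
        ],
        table_tags={"profiling"},
        column_tags={"email": {"pii"}},
    )
    print(read(campaign, reader_groups={"analysts"}, purpose="analytics"))
    # [{'email': '***', 'clicks': 4, 'profiling_opt_out': False}]
```

Because the rules look only at tags, onboarding a new campaign table requires tagging it, not writing new grants, which is the scaling advantage ABAC has over table-by-table RBAC.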

Conclusion: Governance as an Enabler for Marketing and Advertising

Removing customers who have not opted into marketing is not enough; it does not inherently prevent non-compliance with privacy regulations. For marketers and advertisers, true compliance means restricting not just what data is seen, but how it is used.

By implementing persona-based restrictions (ensuring Data Scientists cannot build models with customers who have opted out of profiling, and Marketers cannot target non-consenting customers) and leveraging automated tagging policies, organizations can enforce compliance by design. Marketing and advertising teams can use data with confidence, knowing that the data foundation itself is enforcing the privacy promises made to the customer.
