Production by Default. Governed Pipelines. Measurable Quality.

Why This Matters Now

Most enterprises already have Databricks and a few promising pilots.

What they lack is the operating model that takes AI from proof-of-concept to production.

Without it:

- 80%+ of models never see production

- Governance and cost visibility come too late

- Scripts and notebooks scatter across teams

Ascend AI closes that gap. It’s a pre-built, Databricks-native operating model that unifies CI/CD, policy packs, evaluation, serving, monitoring, and cost controls, all as code.

What Is Ascend AI: MLOps + LLMOps Foundations?

Ascend AI turns your Lakehouse into a governed AI factory.

It combines the latest Databricks stack (Delta Lake, Unity Catalog, MLflow 3.x, Lakehouse Monitoring, Vector Search, and Mosaic AI Gateway) with Koantek’s automation and best-practice templates.

Every model, agent, and workflow is built for production by default.

You Get

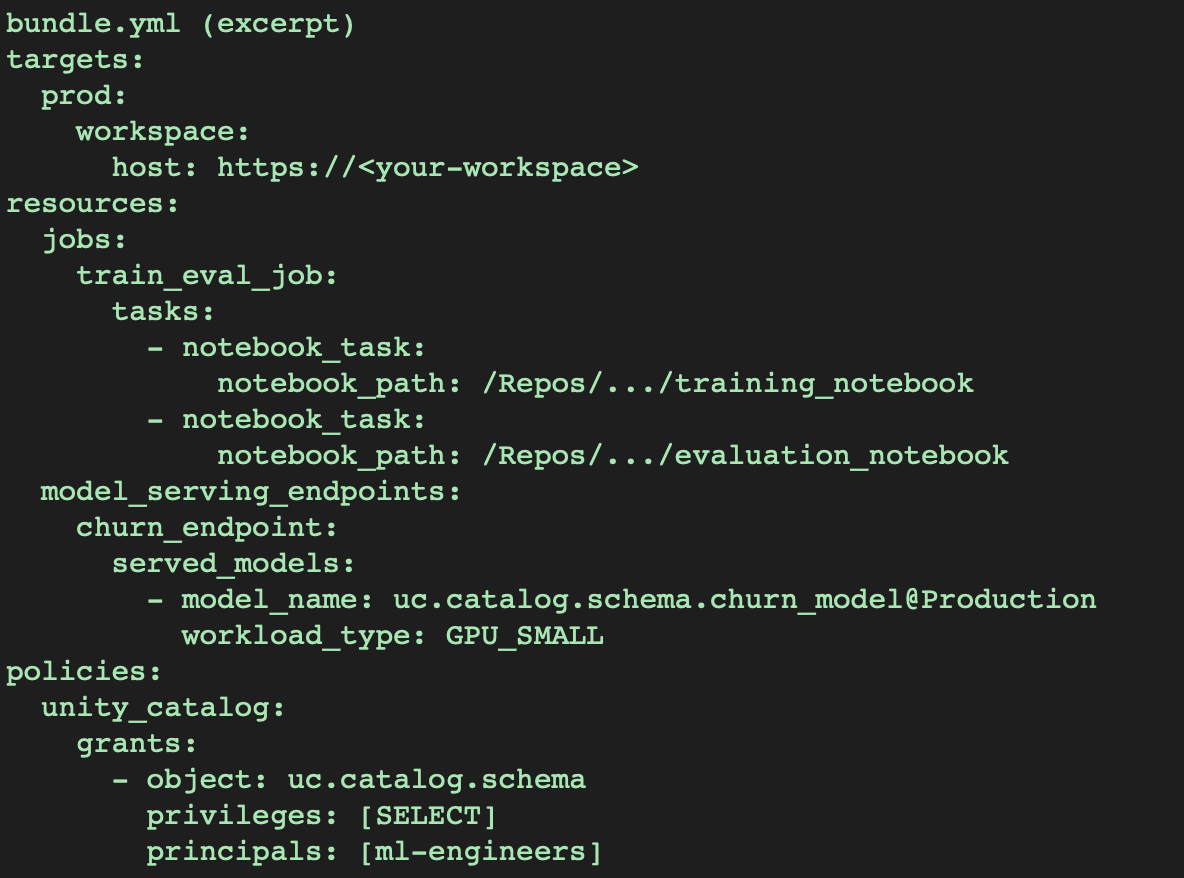

- Blueprinted CI/CD: Databricks Asset Bundles promote jobs, notebooks, and models across environments with guardrails.

- Unified Governance: Unity Catalog enforces fine-grained access control and captures lineage and audit trails, supporting compliance with HIPAA, GDPR, and SOX.

- One Platform for ML + LLM: MLflow 3.x handles model and prompt versioning, evaluation, and RAG templates.

- Observability + FinOps: Lakehouse Monitoring and Inference Tables track drift, latency, and true cost per prediction or token.

- Secure, Repeatable Provisioning: Terraform stands up governed, network-isolated Databricks workspaces in hours.

Business Impact

- Speed: First production deployment in weeks, not months (healthcare MVPs in under 4 weeks).

- Cost: Up to 60% OPEX reduction by automating 80% of MLOps tasks and right-sizing infrastructure.

- Risk: Compliance controls from day one, with Unity Catalog data lineage and PHI-safe access via Mosaic AI Gateway.

- Scale: Platform teams gain throughput: more use cases without more headcount.

How It Works — A Technical Deep Dive

1. CI/CD Backbone with Databricks Asset Bundles

Everything (pipelines, jobs, models, and policies) is declared in bundles and promoted through quality gates, so environments stay synchronized.

2. Secure Provisioning (Day 0)

Terraform sets up workspaces, IAM roles, UC metastores, cluster policies, and private networking. Guardrails are baked in.

3. Feature + Retrieval Fabric

Lakeflow pipelines move raw → Bronze/Silver/Gold; the Feature Store registers reusable features; Vector Search manages embeddings for RAG.
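To make the Bronze/Silver/Gold idea concrete, here is a minimal pure-Python sketch. It is not how Lakeflow works internally: plain lists of dicts stand in for Delta tables, and the field names (`event_id`, `customer_id`, `amount`) and the source name `orders_api` are hypothetical.

```python
# Hypothetical sketch of a medallion flow; lists of dicts stand in for Delta tables.
from collections import defaultdict
from datetime import datetime, timezone


def to_bronze(raw_records, source):
    # Bronze: land raw records unchanged, adding ingestion metadata.
    ingested_at = datetime.now(timezone.utc).isoformat()
    return [{**r, "_source": source, "_ingested_at": ingested_at} for r in raw_records]


def to_silver(bronze):
    # Silver: drop invalid rows, normalize types, de-duplicate on event_id.
    seen = set()
    silver = []
    for r in bronze:
        if r.get("event_id") is None or r.get("amount") is None:
            continue
        if r["event_id"] in seen:
            continue
        seen.add(r["event_id"])
        silver.append({
            "event_id": r["event_id"],
            "customer_id": str(r["customer_id"]),
            "amount": float(r["amount"]),
        })
    return silver


def to_gold(silver):
    # Gold: business-level aggregate (total spend per customer),
    # the kind of value one might register as a reusable feature.
    totals = defaultdict(float)
    for r in silver:
        totals[r["customer_id"]] += r["amount"]
    return dict(totals)


raw = [
    {"event_id": 1, "customer_id": 7, "amount": "10.5"},
    {"event_id": 1, "customer_id": 7, "amount": "10.5"},  # duplicate
    {"event_id": 2, "customer_id": 7, "amount": "4.5"},
    {"event_id": 3, "customer_id": 9, "amount": None},    # invalid, dropped in Silver
]
gold = to_gold(to_silver(to_bronze(raw, source="orders_api")))
print(gold)  # {'7': 15.0}
```

Each layer only reads the one before it, which is what lets Silver and Gold be rebuilt from Bronze when cleaning rules change.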

4. Model / Agent Factory

Two tracks, one standard:

- ML Track: Delta-versioned datasets, evaluation via MLflow 3.x.

- LLM Track: Agent Bricks (low-code) or DSPy (code-first) with versioned prompts and AI-judge evaluation.
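Versioned prompts are what make LLM evaluations reproducible. MLflow 3.x provides its own prompt versioning; the class below is only a hypothetical in-memory stand-in (`PromptRegistry` is an illustrative name, not an MLflow API) showing the core idea: every distinct template text gets a new version number and a content hash.

```python
import hashlib


class PromptRegistry:
    """Hypothetical in-memory prompt registry (illustration only, not MLflow).

    Each distinct template for a name gets the next integer version and a
    SHA-256 content hash, so an evaluation run can record exactly which
    prompt text it scored.
    """

    def __init__(self):
        self._versions = {}  # name -> list of (version, sha256, template)

    def register(self, name, template):
        history = self._versions.setdefault(name, [])
        digest = hashlib.sha256(template.encode("utf-8")).hexdigest()
        if history and history[-1][1] == digest:
            return history[-1][0]  # unchanged template: reuse the latest version
        version = len(history) + 1
        history.append((version, digest, template))
        return version

    def get(self, name, version=None):
        history = self._versions[name]
        if version is None:
            return history[-1][2]  # latest version
        return history[version - 1][2]


registry = PromptRegistry()
v1 = registry.register("support_qa", "Answer the question: {question}")
v2 = registry.register("support_qa", "Answer concisely, citing sources: {question}")
print(v1, v2)  # 1 2
```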

5. Gated Release

Automated evaluation jobs run golden-set tests and AI-judge scoring. Only passing versions are promoted; there are no manual shortcuts.
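A quality gate boils down to a pass/fail decision over evaluation results. This sketch assumes two hypothetical metrics (a golden-set pass rate and per-example AI-judge scores on a 1 to 5 scale) and illustrative thresholds; real gate criteria would be set per use case, and `EvalResult`, `passes_gate`, and `promote` are names invented for this example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class EvalResult:
    # Hypothetical evaluation summary for one candidate version.
    golden_pass_rate: float  # fraction of golden-set cases passed (0.0 to 1.0)
    judge_scores: List[float] = field(default_factory=list)  # per-example AI-judge scores (1 to 5)


def passes_gate(result, min_pass_rate=0.95, min_judge_mean=4.0):
    """Return (ok, reasons). Thresholds are illustrative defaults, not product settings."""
    reasons = []
    if result.golden_pass_rate < min_pass_rate:
        reasons.append(f"golden pass rate {result.golden_pass_rate:.2f} < {min_pass_rate}")
    if not result.judge_scores:
        reasons.append("no AI-judge scores recorded")
    else:
        mean = sum(result.judge_scores) / len(result.judge_scores)
        if mean < min_judge_mean:
            reasons.append(f"mean judge score {mean:.2f} < {min_judge_mean}")
    return len(reasons) == 0, reasons


def promote(version, result):
    """Promote only if the gate passes; there is deliberately no override flag."""
    ok, reasons = passes_gate(result)
    if not ok:
        raise RuntimeError(f"{version} blocked: " + "; ".join(reasons))
    return f"{version} promoted"


print(promote("v3", EvalResult(0.98, [4, 5, 4])))  # v3 promoted
```

Returning the list of failure reasons, rather than a bare boolean, gives the release log an explanation for every blocked version.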

6. Serving & Inference

Databricks Model Serving and Mosaic AI Gateway handle RBAC, PII scrubbing, rate limits, and detailed logs.
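PII scrubbing at the gateway amounts to detecting sensitive spans and replacing them before text reaches a model or a log. The sketch below uses a few simple regular expressions as a stand-in; it is not how Mosaic AI Gateway detects PII, and real PHI detection needs far broader coverage than these hypothetical patterns.

```python
import re

# Hypothetical, deliberately simple patterns; order matters because SSNs
# are replaced before the broader phone pattern runs.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def scrub(text):
    """Replace each detected PII span with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(scrub("Contact jane.doe@example.com or 555-123-4567, SSN 123-45-6789"))
# Contact [EMAIL] or [PHONE], SSN [SSN]
```

Typed placeholders (rather than blanking the text) keep prompts readable for the model and make redactions countable in logs.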

7. Monitoring & FinOps

Lakehouse Monitoring tracks data quality and model health.

Inference Tables log predictions and responses for drift analysis.
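Drift analysis compares the distribution of a feature (or prediction) in recent inference logs against a baseline. The Population Stability Index (PSI) is shown here only as one common, easy-to-read drift statistic; Lakehouse Monitoring computes its own set of drift metrics, and the sample data below is made up.

```python
import bisect
import math


def psi(baseline, current, edges, eps=1e-6):
    """Population Stability Index between two numeric samples.

    edges: sorted bin edges (len(edges) - 1 bins). Values outside the edges
    are clipped into the outermost bins; eps keeps empty bins from
    producing log(0).
    """
    n_bins = len(edges) - 1

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            i = bisect.bisect_right(edges, v) - 1
            counts[min(max(i, 0), n_bins - 1)] += 1
        return [c / len(values) for c in counts]

    p, q = proportions(baseline), proportions(current)
    return sum((qi - pi) * math.log((qi + eps) / (pi + eps)) for pi, qi in zip(p, q))


baseline = [5] * 50 + [15] * 50  # hypothetical training-time feature values
current = [15] * 50 + [25] * 50  # hypothetical values from recent inference logs
edges = [0, 10, 20, 30]
print(psi(baseline, baseline, edges))     # 0.0
print(psi(baseline, current, edges) > 0.2)  # True
```

A frequently quoted rule of thumb treats PSI above 0.2 as a significant shift, though alert thresholds are best tuned per feature.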

FinOps dashboards reveal real $/prediction and $/1K tokens—so AI spend stays predictable.
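Unit cost is total spend divided by units served. This sketch aggregates hypothetical usage records per serving endpoint and derives $/prediction and $/1K tokens; the record schema, endpoint names, and dollar figures are illustrative assumptions, not Databricks billing fields.

```python
from collections import defaultdict

# Hypothetical usage records; real inputs would be joined from billing and
# inference-log tables whose schemas are not shown here.
usage = [
    {"endpoint": "churn-model", "cost_usd": 120.0, "requests": 400_000, "tokens": 0},
    {"endpoint": "support-rag", "cost_usd": 30.0, "requests": 12_000, "tokens": 20_000_000},
    {"endpoint": "support-rag", "cost_usd": 15.0, "requests": 6_000, "tokens": 10_000_000},
]


def unit_costs(records):
    """Aggregate spend per endpoint, then divide by requests and by 1K-token units."""
    totals = defaultdict(lambda: {"cost_usd": 0.0, "requests": 0, "tokens": 0})
    for r in records:
        t = totals[r["endpoint"]]
        t["cost_usd"] += r["cost_usd"]
        t["requests"] += r["requests"]
        t["tokens"] += r["tokens"]
    report = {}
    for endpoint, t in totals.items():
        report[endpoint] = {
            "usd_per_prediction": t["cost_usd"] / t["requests"] if t["requests"] else None,
            # Non-LLM endpoints have no tokens, so the token metric is None.
            "usd_per_1k_tokens": t["cost_usd"] / (t["tokens"] / 1000) if t["tokens"] else None,
        }
    return report


report = unit_costs(usage)
```

With these figures, `churn-model` works out to $0.0003 per prediction, and `support-rag` to $0.0025 per request and $0.0015 per 1K tokens.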

Who It’s For

- Platform Engineering / MLOps: standardize releases and governance.

- ML Engineers / Data Scientists: focus on features and metrics, not plumbing.

- CIO / CDO: gain governed AI velocity with clear ROI and risk controls.

Get Started

Ascend AI Strategy Workshop (free):

Map use cases to business value and assess readiness in 90 minutes.

Live Demo / PoC:

Watch a governed pipeline move from dev → prod in real time.

Scope Alignment:

Receive a tailored roadmap (phases, milestones, effort) for executive buy-in.

Contact: sales@koantek.com
